I purchased Adobe Photoshop CS3 a number of years ago for one and only one reason: color management.

I was the owner of a Minolta Dimage 7 camera, and was greatly disappointed in its photos. Naturally, I blamed the camera and had huge buyer's remorse. One of the many problems was that the camera took photos in a non-standard colorspace. According to the camera review linked above:

Looking over the D7's images I couldn't help but feel that certain colours seemed under-saturated (mostly greens and blues)...

At this stage it was clear to me that the DiMAGE 7 was shooting in its own colour space...

This is not documented in the DiMAGE 7 manual. I feel it should be made very clear to users, there's certainly a chance that the average user will simply load images directly from the camera using a card reader and never use the Minolta Image Viewer. These users may well end up disappointed by the D7's colour.

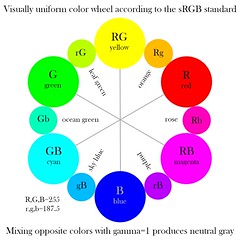

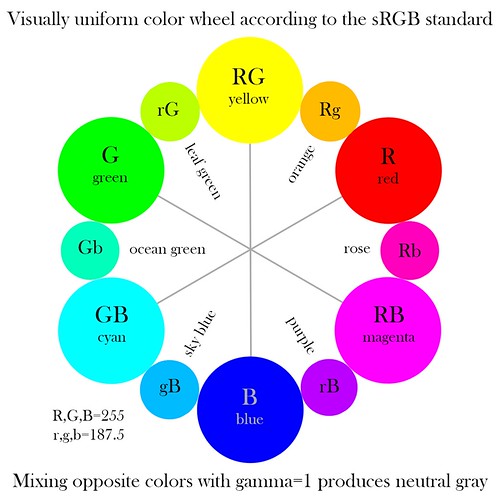

The average user won't know what colour space they're in, indeed most users don't even calibrate their monitors. However, at a consumer level, most of you will be viewing this web page and all the digital photographs you ever deal with in the sRGB colour space.

I admit that at the time I didn't know much about color spaces (and really I still don't). My Dimage images looked poor for various reasons, and the processing utility that came with the camera produced very dark, unacceptable images. Despite doing some research, I really didn't know how to correct for these problems, most notably the color space problem.

Then at Christmas 2006, I received a copy of Adobe Photoshop Elements. By that time I had already purchased a newer, somewhat inferior camera, but it produced great images right out of the box. But I tried using the Adobe Camera RAW software in Elements to process my old Dimage photos and, behold, they looked great. The Adobe software knew all about the Dimage color space problem and correctly converted my images to the Web standard sRGB colorspace, and solved other problems too. By the next summer, I upgraded to Photoshop CS3 to gain access to the more advanced features in Adobe Camera RAW.

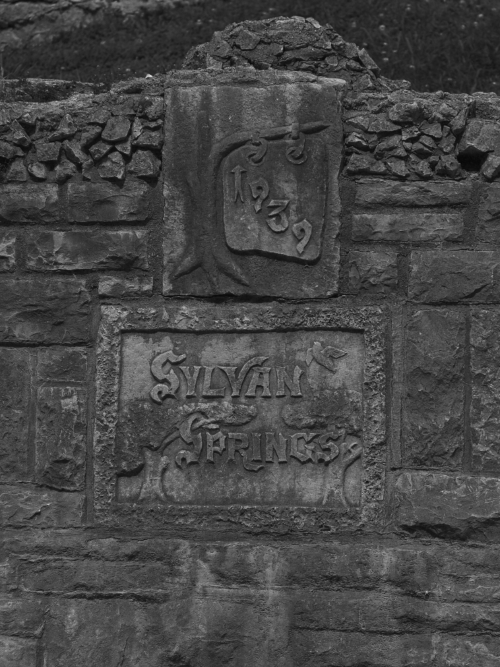

To the left is a typical JPEG image I got from the Dimage. On the right is an image I shot in RAW and processed with ACR.

So I was a new owner of Photoshop. But I didn't know how to use it much beyond the excellent RAW conversions. Many of the functions seemed to be useful for only producing odd special effects; although I didn't know it at the time, I was using a chain saw instead of a scalpel.

For example, I thought the Curves and the Channel Mixer functions were only used to produce special effects as seen here. You can use them that way, but that is neither the intent of the tools nor their highest and best use. In the bottom image, I randomly adjusted the sliders in Channel Mixer.

Thanks to Dan Margulis' excellent book, Professional Photoshop, Fifth Edition, I learned a few tricks on the right use of some of the Photoshop tools. For example, Dan showed how to brighten green foliage using Channel Mixer:

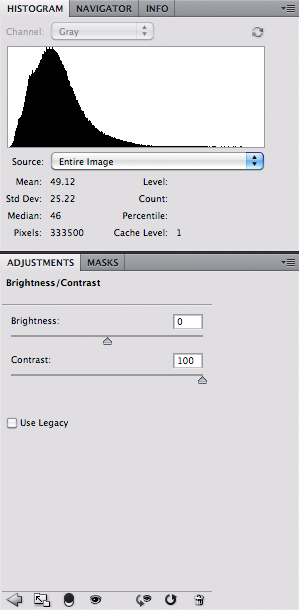

Following are the adjustments:

Searching around the web, I see that most people use the channel mixer as a kind of mysterious saturation or desaturation tool. Above we see how it is used to brighten a color. It is often used as a black and white conversion tool: all you need to do is check the 'Monochrome' box and adjust the sliders until you get the contrast you desire in your conversion.
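As a rough sketch of what the 'Monochrome' option does, each gray value is a weighted sum of the red, green, and blue values of a pixel. The weights below are hypothetical slider settings chosen only for illustration, and `to_monochrome` is an illustrative name, not a Photoshop function.

```python
def to_monochrome(rgb, weights):
    """Return one gray value as a weighted sum of the R, G, B values."""
    return sum(weight * value for weight, value in zip(weights, rgb))


# Hypothetical slider settings (40%, 40%, 20%); they total 100%,
# so a neutral gray keeps its original brightness.
WEIGHTS = (0.40, 0.40, 0.20)

neutral = to_monochrome((128, 128, 128), WEIGHTS)
reddish = to_monochrome((200, 58, 27), WEIGHTS)
print(neutral, reddish)
```

Raising one weight and lowering another, while keeping the total at 100%, changes which colors end up light or dark in the conversion without changing the neutral tones.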

For a while I've been interested in the Purkinje effect, which describes the shift in colors we experience as light gets dimmer. I was prompted to do this in response to the poor quality of photos I took around dusk: how, I thought, could I reliably and repeatedly correct my photographs to look something like I remember seeing it? My earlier efforts at coming up with a Purkinje correction are here and here.

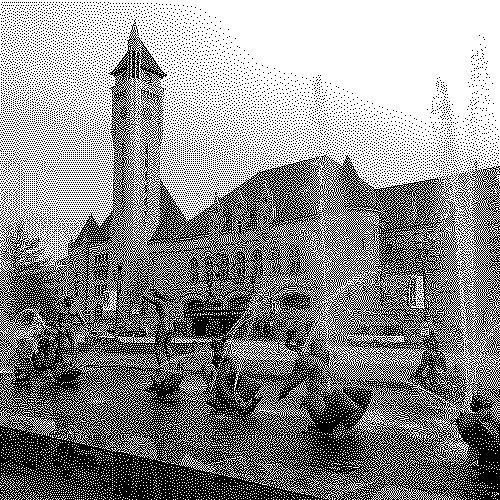

This correction is a two-step process. First I adjust the relative tonalities of the colors in the scene. When light gets darker, the eye becomes more sensitive to blue-green light, and simultaneously gets less sensitive to red colors. In particular, foliage has a strong red color component in daylight which makes it appear yellow-green; under dim lighting, foliage looks relatively darker. I also noticed that I had to adjust the white balance towards a sky blue color.

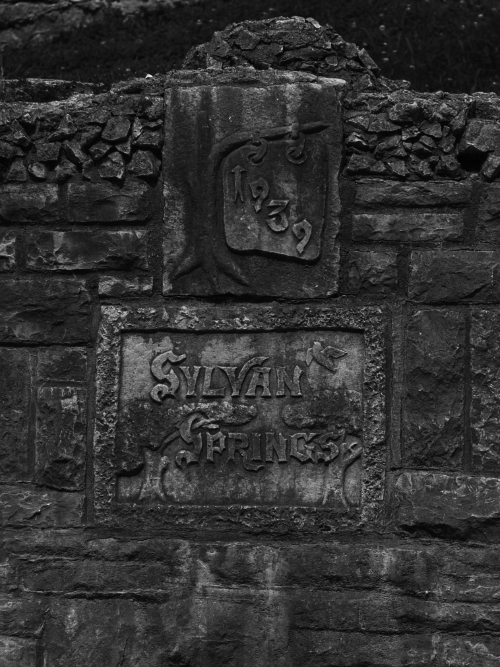

In the scene above, I distinctly recall the foliage being quite dark, while the fountain (seen in the lower right corner), the limestone edging on the sidewalk, and the columns appeared much brighter than the surrounding areas. In a Luminosity layer, I used the channel mixer to both boost the brightness of the blue channel and to darken the red channel:

I could not just use this channel mixer indiscriminately across the image; the yellow light in the window in the tower was also darkened unrealistically. In this image, I applied the channel mixer with masking so that it did not change the brightest tones in the image.

I got these numbers in the channel mixer by comparing charts of light response of the dark adapted eye versus the sRGB primary color response to light. Measuring the response values across the color spectrum and doing some statistics, I was able to determine which mixture of color components best simulated the eye's night-adapted response. Here we are getting very close to the intended use of the channel mixer.

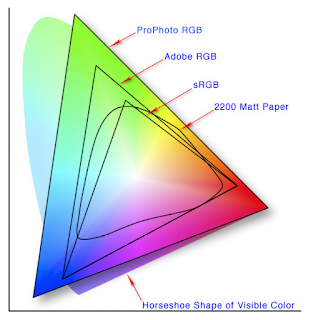

The most precise use of the Channel Mixer is for converting between various RGB color spaces. Please consider how I started the article: my old Dimage camera had an odd color space, which if not handled properly, would produce disappointing images and poor color rendition.

Every digital camera has a native color space, and it isn't the Internet standard sRGB or Adobe RGB. Every brand of digital camera has a native color gamut that is whatever. The electronics within the camera do the conversion between the camera's native color space and sRGB; or, if you shoot in RAW, the software on your computer (like Adobe Camera RAW, Lightroom, or Apple Aperture) does it for you after the fact. Digital cameras are sensitive to the various colors in a manner which is quite unlike the human eye's response. Practically speaking, a digital camera is limited by cost, manufacturing technique, and long-term stability; while the human eye is precious beyond cost, has the most complicated manufacturing known, and repairs itself. Therefore it is unrealistic to expect that cameras will see color precisely as is seen by human eyes.

As it turns out, it is very difficult to get precise color capture capability on a digital device, and forget about trying to capture color precisely as the human eye sees it, especially with exceptionally pure colors. (Technically speaking, digital cameras do not meet the Luther-Ives Condition, which determines if a camera can capture colors accurately.) There are cameras that do have better color rendition than consumer or pro-level cameras but a) you can't afford them, b) you don't want to spend the time getting them to work right, c) these cameras only work in controlled laboratory conditions, and d) you won't be able to display your accurate colors on a computer monitor or print them on a printer. So we are looking for good enough colors.

So we take a digital camera that has a color space of

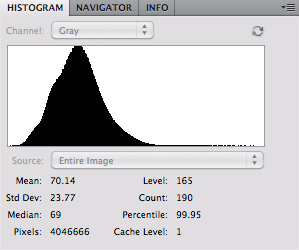

whatever, and we use the computer to convert the images to a standard color space like sRGB. Here is an example photo taken with my Nikon D40. I shot this X-Rite ColorChecker Passport in daylight using RAW capture. I set the white balance using the neutral card in the bottom photo. Here I used Nikon View NX2 software to convert the RAW image to JPEG in the sRGB color space:

I held up the target in daylight coming through my office window, and compared it to this image on my screen. The colors look pretty accurate. But this is not the image as the camera captures it. There is some significant processing going on to produce this final image. Here is an approximation of how the camera really sees the target:

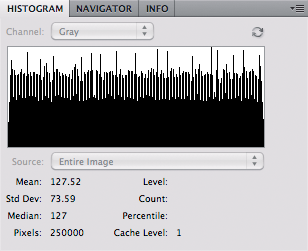

I used the free and excellent RAW Photo Processor for Mac OS X. I set the white balance to UniWB, set the gamma to 1.0, and saved the image as raw pixels without a color profile loaded. By looking at this image we can see that the camera is very sensitive to green light, less sensitive to blue, and not very sensitive to red light. All cameras have a fixed, native white balance which does not correspond to any common lighting condition. Digital cameras capture more than 8 bits per channel (usually in the range of 10 to 14 bits per channel), and these extra bits are used to prevent banding artifacts when the software does this kind of processing. For daylight, the camera must amplify the blue channel by about 50% and approximately double the signal in the red channel. Under incandescent lighting, the red and green channels are usually fine, but the blue channel must be boosted strongly, which typically causes lots of noise.
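The daylight amplification described above amounts to multiplying each linear RAW channel by its own gain. Here is a minimal sketch that uses the approximate figures from the text (red doubled, blue boosted by 50%, green left alone). The patch values are made up for illustration and are not measurements from the D40, and `apply_white_balance` is an illustrative name.

```python
def apply_white_balance(raw_rgb, gains):
    """Multiply each linear RAW channel by its gain and clip to the 0..1 range."""
    return tuple(min(1.0, max(0.0, value * gain))
                 for value, gain in zip(raw_rgb, gains))


# Approximate daylight gains from the text: red x2.0, green x1.0, blue x1.5.
DAYLIGHT_GAINS = (2.0, 1.0, 1.5)

# Hypothetical neutral gray patch as the sensor might record it (linear, 0..1):
# strong green, weaker blue, weakest red.
raw_gray = (0.20, 0.40, 0.40 / 1.5)

balanced = apply_white_balance(raw_gray, DAYLIGHT_GAINS)
print(balanced)
```

After the gains are applied, the three channels of the gray patch come out nearly equal, which is what a correct white balance means. Any noise in a channel is multiplied by the same gain, which is why a strongly boosted channel looks noisy.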

Let's do a white balance. I used RAW Photo Processor to apply the white balance which I captured when I took the photo:

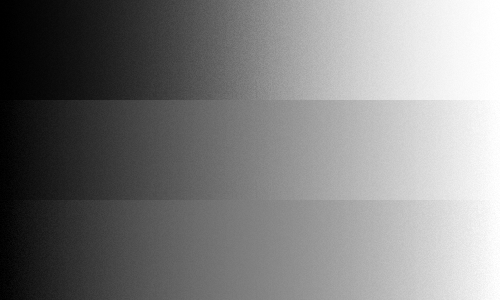

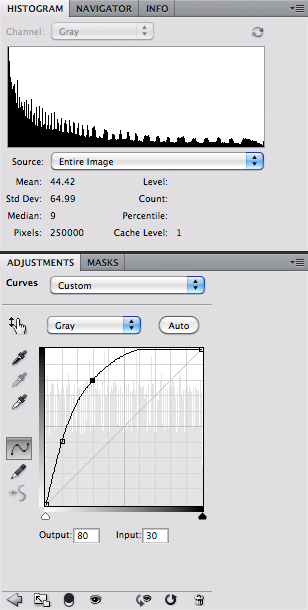

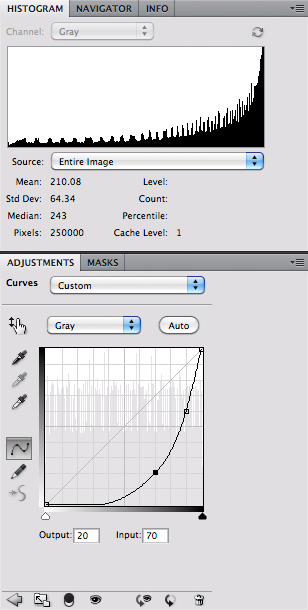

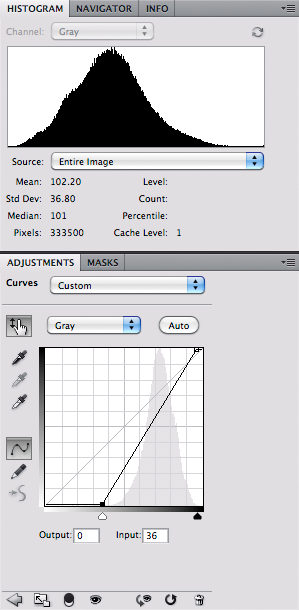

Cameras don't see light like humans do. In a camera, twice the light intensity generates twice the RAW signal; with humans, doubling the intensity of light makes a scene look only

somewhat brighter. Likewise, halving the intensity of light only makes a thing look

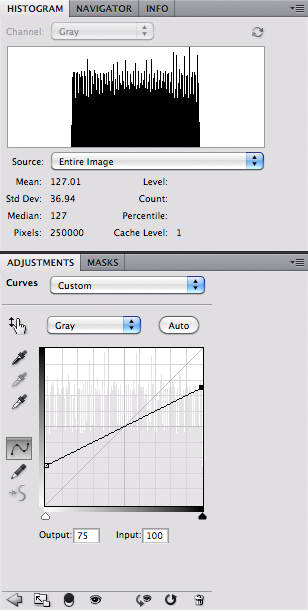

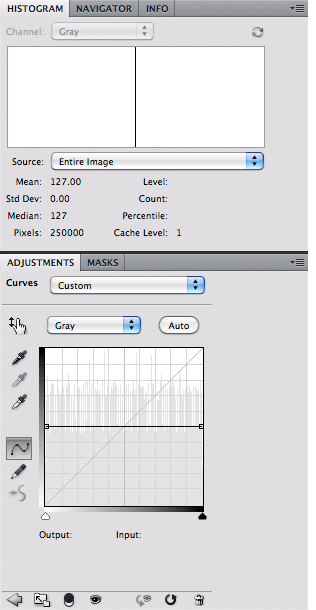

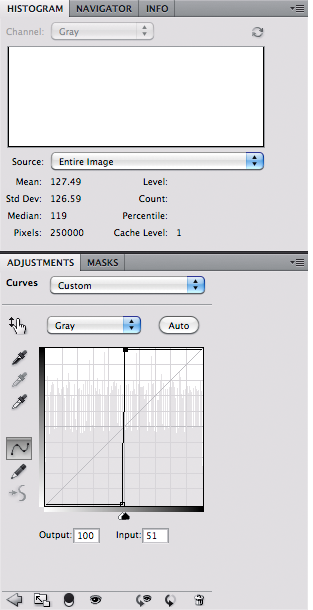

slightly darker. And so digital images are designed to provide extra sensitivity to the darker tones at the expense of brighter tones; this will allow us to more efficiently use our image data by allocating more data to the important midtones. To correct for this, we have to brighten the midtones in the camera's image, while keeping the very darkest and brightest tones unchanged.

Here I applied a brightness (or gamma) correction in RAW Photo Processor. This is not precisely the same brightness curve as is found in the sRGB standard, so the tonality of this image doesn't quite match the Nikon-processed image. But it is good enough for our purposes.
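To see why a plain gamma setting does not quite match the sRGB curve, the two can be compared side by side. This sketch uses the piecewise transfer function from the sRGB standard and a simple power curve with an assumed gamma of 2.2. The exact curve RAW Photo Processor applies is not stated here, so the 2.2 value is only an illustration.

```python
def srgb_encode(linear):
    """Encode a linear value (0..1) with the piecewise sRGB transfer function."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def simple_gamma_encode(linear, gamma=2.2):
    """Encode a linear value (0..1) with a plain power curve (assumed gamma)."""
    return linear ** (1 / gamma)


# Black and white stay put; the midtones are lifted, by slightly different amounts.
for linear in (0.0, 0.05, 0.18, 0.5, 1.0):
    print(linear, round(srgb_encode(linear), 4), round(simple_gamma_encode(linear), 4))
```

Both curves leave the darkest and brightest values unchanged and brighten everything in between. They disagree slightly, mostly in the shadows, which is enough to give a small difference in tonality between two conversions of the same file.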

The first thing I notice is that the colors are disappointing. They look a bit flat and unsaturated compared to the first image. But this is actually a good thing. It tells me that my camera's native color space — whatever it is — is broader than the sRGB color standard. This flatness tells me that the colors could be potentially much brighter, if only I use a large enough color space, one larger than sRGB. In the camera native color space, the bright red color on our X-Rite target is merely mediocre.

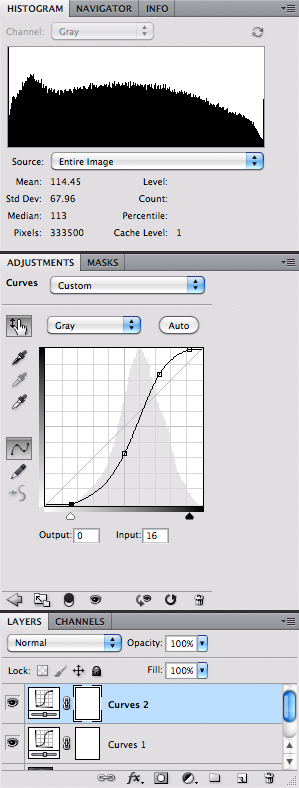

To do this conversion from the camera native color space to sRGB in Photoshop, we imitate the processing used by the camera manufacturers by using Channel Mixer.

Suppose you take a given standard color, and take a photograph of it, being careful to do a good white balance and exposure. Good standard colors ought to produce a particular sRGB color value if exposed correctly; for example, a pure red the same color as the sRGB primary red color ought to give you R=255, G=0, B=0. But since your digital camera uses a different color system, it gives you another value, such as R=200, G=58, B=27. Your digital camera likely has a primary red color that is brighter, more saturated, and more pure than what the sRGB standard can describe.

So what we do is to boost the camera's red value somewhat, and then subtract out any green or blue that happens to be contaminating the red. So for any given Camera RAW value, we can get the equivalent sRGB values.

I got test data from DxOMark, a company that measures performance data for digital cameras and lenses. Here is an excerpt from the dataset:

Go to http://www.dxomark.com/index.php/Camera-Sensor/All-tested-sensors/Nikon/D40, click on Color Response, and click CIE-D50. This gives you daylight color data. They also provide CIE-A data, which models incandescent lighting.

Please note the White balance scales: this shows how much we should boost the various RAW color channels to get good white balance under D50 daylight conditions. Please note that D50, at a color temperature of about 5000 K, is somewhat warmer (less blue) than midday daylight at mid-latitudes, but is a good enough approximation for our use here.

To convert the RAW data to sRGB, we put the DxOMark Color matrix data above into the Channel Mixer:

Please note that due to rounding errors, the numbers in each Output Channel do not total 100%. Undoubtedly this will shift our white balance slightly. Generally speaking, be sure that each channel mixer output channel totals 100% to avoid a change in white balance. However, the final image looks pretty good:

The colors are fairly close to the Nikon-produced colors.
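The rounding issue mentioned above is easy to check numerically. This sketch takes the red output channel coefficients quoted in this article's red-channel formula (1.64, -0.61, -0.02), reports their total as a percentage, and rescales them so that they total exactly 100%. Rescaling all three weights equally is just one possible fix, shown here as an assumption. Photoshop's sliders take whole percentages, so in practice you might nudge a single slider instead.

```python
def row_total_percent(row):
    """Total of one output channel's mixer weights, expressed in percent."""
    return 100.0 * sum(row)


def normalize_row(row):
    """Rescale the weights so that they total exactly 1.0 (that is, 100%)."""
    total = sum(row)
    return tuple(weight / total for weight in row)


# Red output channel weights from the article's formula.
RED_ROW = (1.64, -0.61, -0.02)

print(row_total_percent(RED_ROW))   # slightly above 100
normalized_red = normalize_row(RED_ROW)
print(normalized_red, row_total_percent(normalized_red))
```

A row that totals more than 100% makes a neutral gray come out with slightly too much of that channel, which is the small white balance shift described above.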

You can use the same sort of process to do your own camera calibrations under all kinds of lighting conditions. Please note that the DxOMark color matrix data assumes the use of something like the channel mixer to convert from the camera's native color space to sRGB. For example:

sRGB Red = (1.64 x Camera RAW Red) - (0.61 x Camera RAW Green) - (0.02 x Camera RAW Blue)
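The formula above can be sketched in a few lines. Only the red row comes from the article. The sample pixel values are hypothetical, and they are assumed to be white-balanced, linear RAW values scaled to the 0..1 range.

```python
# Red output channel weights from the formula above.
RED_ROW = (1.64, -0.61, -0.02)


def mix_channel(raw_rgb, row):
    """Weighted sum of the RAW channels, as one Channel Mixer output channel does."""
    return sum(weight * value for weight, value in zip(row, raw_rgb))


def clip(value, low=0.0, high=1.0):
    """Keep the result inside the displayable range."""
    return max(low, min(high, value))


# Hypothetical reddish pixel: the red is boosted and the green and blue
# contamination is subtracted out.
srgb_red = clip(mix_channel((0.60, 0.15, 0.05), RED_ROW))

# Hypothetical neutral gray pixel: stays almost, but not exactly, neutral
# because the rounded weights total 101%.
gray_red = clip(mix_channel((0.50, 0.50, 0.50), RED_ROW))

print(srgb_red, gray_red)
```

The green and blue output channels work the same way, each with its own row of three weights.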

If you have a target with known sRGB (or other color space) values, you can convert an image to these colors by comparing the delivered colors with the known colors. I use Microsoft Excel to come up with estimates for the channel mixer values, using the Solver tool found in the Analysis ToolPak add-in. This is a rather complicated step, and requires some knowledge of statistics.
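For readers without Excel, the same kind of estimate can be sketched with an ordinary least-squares fit. This is a swapped-in technique, not the Solver setup described above. It finds the three weights of one output channel that best map the RAW values of a set of patches to their known target values. The patch values below are synthetic: they are generated from the red row quoted earlier (1.64, -0.61, -0.02) so that the fit has a known right answer. All function names are illustrative.

```python
def solve_linear_system(matrix, vector):
    """Solve a small square system by Gaussian elimination with partial pivoting."""
    n = len(vector)
    aug = [list(row) + [value] for row, value in zip(matrix, vector)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for r in reversed(range(n)):
        known = sum(aug[r][c] * solution[c] for c in range(r + 1, n))
        solution[r] = (aug[r][n] - known) / aug[r][r]
    return solution


def fit_mixer_row(raw_patches, target_values):
    """Least-squares weights so that (weights . raw) is close to target for each patch."""
    ata = [[sum(p[i] * p[j] for p in raw_patches) for j in range(3)]
           for i in range(3)]
    atb = [sum(p[i] * t for p, t in zip(raw_patches, target_values))
           for i in range(3)]
    return solve_linear_system(ata, atb)


# Synthetic linear RAW patch values (not real measurements).
raw_patches = [(0.60, 0.15, 0.05), (0.20, 0.55, 0.10), (0.10, 0.20, 0.50),
               (0.40, 0.40, 0.40), (0.70, 0.60, 0.15), (0.05, 0.05, 0.05)]

# Synthetic "known" red values, built from the red row quoted in the article.
true_row = (1.64, -0.61, -0.02)
target_red = [sum(w * v for w, v in zip(true_row, p)) for p in raw_patches]

fitted_row = fit_mixer_row(raw_patches, target_red)
print([round(weight, 4) for weight in fitted_row])
```

With real photographs of a target the fit will not be exact, and you would repeat it for the green and blue output channels. A plain least-squares fit also does not force each row to total 100%, so the white balance should be checked afterwards.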

Please note that this mathematical transformation between color spaces is not exact, but is rather statistical in nature; the conversion matrix merely gives good color conversions on average. A severe channel mixer setting will also cause much more noise in your image.
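A rough way to see the noise penalty is to assume that each RAW channel carries independent noise of the same size. This assumption is a simplification and is not taken from the article. Under it, the noise in a mixed channel grows by the square root of the sum of the squared weights. The sketch below applies that to the red row quoted earlier.

```python
import math


def noise_gain(row):
    """Noise amplification of one output channel, assuming equal,
    independent noise in each input channel."""
    return math.sqrt(sum(weight * weight for weight in row))


RED_ROW = (1.64, -0.61, -0.02)      # red row from the article's formula
UNCHANGED_ROW = (1.0, 0.0, 0.0)     # a mixer setting that leaves red untouched

red_gain = noise_gain(RED_ROW)
unchanged_gain = noise_gain(UNCHANGED_ROW)
print(round(red_gain, 2), unchanged_gain)
```

The larger the positive and negative weights in a row, the larger this factor becomes, even when the row still totals 100%.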